Tokenization is a cornerstone of natural language processing (NLP), machine learning, and data analysis. It transforms unstructured text into units that machines can process. In this post, we’ll explore tokenization from its fundamentals to its practical implementation, challenges, and future trends.

Introduction to Tokenization

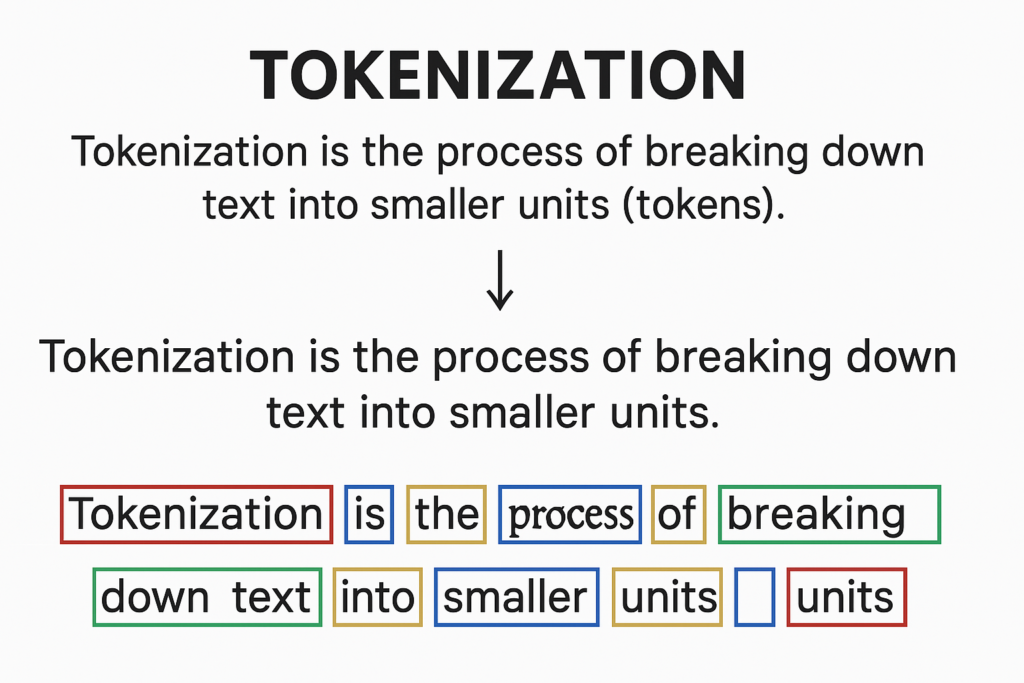

What is Tokenization?

Why is it Important?

Real-World Applications

Tokenization powers numerous everyday technologies:

- Search Engines: Breaks down queries and documents into tokens for matching relevant results.

- Chatbots: Tokenizes user inputs to interpret and respond accurately.

- Sentiment Analysis: Enables word-level analysis to gauge emotions or opinions.

- Language Translation: Splits text into manageable units for translation and reconstruction in another language.

Types of Tokenization

Tokenization comes in various forms, each suited to specific purposes:

Word Tokens

This method splits text into individual words, typically using spaces or punctuation as delimiters.

- Example: “I love coding!” → [“I”, “love”, “coding”, “!”]
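
A minimal sketch of this splitting rule, using only Python's standard `re` module rather than any library's tokenizer. The function name `word_tokens` and the pattern are illustrative assumptions: runs of word characters become words, and each punctuation mark becomes its own token.

```python
import re

def word_tokens(text):
    # \w+ grabs runs of letters, digits and underscores; [^\w\s] grabs each
    # remaining non-space character (punctuation) as a separate token.
    return re.findall(r"\w+|[^\w\s]", text)

print(word_tokens("I love coding!"))  # ['I', 'love', 'coding', '!']
```

A rule this simple splits a contraction such as "don't" into three pieces, which is one reason library tokenizers carry extra rules.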

Sentence Tokens

Here, text is divided into sentences, often based on punctuation like periods or exclamation marks.

- Example: “Hello! How are you?” → [“Hello!”, “How are you?”]
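
As a rough sketch, the punctuation rule can be written as a regular-expression split. The function name `sentence_tokens` is an assumption for illustration, and the rule is deliberately naive.

```python
import re

def sentence_tokens(text):
    # Split on whitespace that follows '.', '!' or '?'; the lookbehind keeps
    # the punctuation attached to the sentence it ends.
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]

print(sentence_tokens("Hello! How are you?"))  # ['Hello!', 'How are you?']
```

The same rule would wrongly break "Dr. Smith arrived." after "Dr.", so real sentence splitters add abbreviation handling on top.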

Character Tokens

Text is broken into individual characters, useful for tasks like character-level language modeling.

- Example: “Dog” → [“D”, “o”, “g”]
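
In Python this needs no library at all. The sketch below also maps each character to an integer id, roughly as a character-level model might; the id scheme is an assumption chosen for illustration.

```python
def char_tokens(text):
    # A Python str iterates one code point at a time.
    return list(text)

tokens = char_tokens("Dog")
print(tokens)  # ['D', 'o', 'g']

# Give each distinct character an integer id (sorted for determinism).
char_to_id = {ch: i for i, ch in enumerate(sorted(set(tokens)))}
print([char_to_id[ch] for ch in tokens])  # [0, 2, 1]
```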

Subword Tokens

An advanced approach, subword tokenization splits words into smaller, meaningful units. Techniques like Byte-Pair Encoding (BPE) or WordPiece excel at handling rare words or morphological variations.

- Example: “playing” → [“play”, “ing”]
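
To see how a word gets cut into known pieces, here is a small sketch of greedy longest-match segmentation, which is similar in spirit to how WordPiece splits words once a vocabulary exists. It is not a BPE or WordPiece implementation: the vocabulary is a made-up toy set, and the "##" continuation markers that BERT-style vocabularies use are left out.

```python
def greedy_subwords(word, vocab, unk="<unk>"):
    """Segment `word` by repeatedly taking the longest prefix found in `vocab`."""
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        # Shrink the candidate from the right until it is a known piece.
        while end > start and word[start:end] not in vocab:
            end -= 1
        if end == start:
            return [unk]  # no known piece starts at this position
        pieces.append(word[start:end])
        start = end
    return pieces

vocab = {"play", "ing", "ed", "er", "walk"}  # hypothetical toy vocabulary
print(greedy_subwords("playing", vocab))  # ['play', 'ing']
print(greedy_subwords("walked", vocab))   # ['walk', 'ed']
print(greedy_subwords("xyz", vocab))      # ['<unk>']
```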

How Tokenization Works

Basic Process

Tokenization typically follows these steps:

- Preprocessing: Clean the text—remove noise (e.g., extra spaces), normalize case (e.g., “Hello” to “hello”), and handle contractions (e.g., “don’t” to “do not”).

- Splitting: Divide the text into tokens using delimiters (e.g., spaces, commas) or predefined rules.

- Handling Special Cases: Address exceptions like hyphenated words (“well-known”), abbreviations (“Dr.”), or multi-word expressions (“New York”).
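
The three steps above can be sketched as a small pipeline. Everything here is an assumption for illustration: the contraction table, the multi-word list, and the function names are toy stand-ins, and abbreviations such as "Dr." are not handled.

```python
import re

CONTRACTIONS = {"don't": "do not", "can't": "cannot", "it's": "it is"}  # toy table
MULTIWORD = {("new", "york"): "new york"}  # toy multi-word expressions

def preprocess(text):
    text = text.replace("\u2019", "'")        # normalize curly apostrophes
    text = re.sub(r"\s+", " ", text).strip()  # collapse extra whitespace
    text = text.lower()                       # normalize case
    for short, full in CONTRACTIONS.items():
        text = text.replace(short, full)      # expand contractions
    return text

def split(text):
    # Words (optionally hyphenated, e.g. "well-known") or single punctuation marks.
    return re.findall(r"\w+(?:-\w+)*|[^\w\s]", text)

def merge_multiword(tokens):
    merged, i = [], 0
    while i < len(tokens):
        pair = tuple(tokens[i:i + 2])
        if pair in MULTIWORD:
            merged.append(MULTIWORD[pair])  # keep the expression as one token
            i += 2
        else:
            merged.append(tokens[i])
            i += 1
    return merged

def tokenize(text):
    return merge_multiword(split(preprocess(text)))

print(tokenize("I  don't live in New York, a well-known city."))
# ['i', 'do', 'not', 'live', 'in', 'new york', ',', 'a', 'well-known', 'city', '.']
```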

Challenges

Tokenization is not always straightforward:

- Ambiguity: Should “New York” be one token or two? Context matters.

- Language-Specific Rules: English uses spaces, but Chinese doesn’t, requiring segmentation algorithms.

- Special Characters and Emojis: Deciding how to handle “@” or “😊” can complicate the process.
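
Two of these problems are easy to reproduce with the standard library. The pattern below is one possible choice, not a standard: it keeps @mentions and #hashtags whole and lets a single-code-point emoji stand as its own token. Emoji built from several code points would still be split.

```python
import re

# Order matters: try @mentions and #hashtags before plain words.
SOCIAL_PATTERN = re.compile(r"[@#]\w+|\w+|[^\w\s]")

def social_tokens(text):
    return SOCIAL_PATTERN.findall(text)

print(social_tokens("Thanks @anna 😊!"))  # ['Thanks', '@anna', '😊', '!']

# Whitespace splitting finds no word boundaries in unspaced scripts.
print("我爱编程".split())  # ['我爱编程']
```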

Tools and Libraries

Popular tools simplify tokenization:

- NLTK: Python library with basic word and sentence tokenization functions.

- spaCy: Efficient, multilingual, and production-ready.

- Hugging Face Tokenizers: Optimized for subword tokenization in transformer models.

- TensorFlow: Includes text processing utilities for tokenization.

Tokenization in Different Languages

Language Variations

Tokenization adapts to linguistic differences:

- English: Simple, thanks to spaces and punctuation.

- Chinese: No spaces between words, so segmentation algorithms are needed.

- German: Long compound words (e.g., “Donaudampfschiff”) may require subword splitting.
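
One classic baseline for unspaced text is forward maximum matching against a word list. The sketch below assumes a tiny hand-made dictionary; production segmenters generally rely on statistical or neural models rather than a lookup like this.

```python
def max_match(text, dictionary, max_len=4):
    """Forward maximum matching: at each position take the longest dictionary word."""
    tokens, i = [], 0
    while i < len(text):
        for size in range(min(max_len, len(text) - i), 0, -1):
            candidate = text[i:i + size]
            # Fall back to a single character when nothing longer matches.
            if candidate in dictionary or size == 1:
                tokens.append(candidate)
                i += size
                break
    return tokens

zh_words = {"我", "爱", "自然", "语言", "自然语言", "处理"}  # hypothetical word list
print(max_match("我爱自然语言处理", zh_words))
# ['我', '爱', '自然语言', '处理']

de_parts = {"donau", "dampf", "schiff"}  # hypothetical compound parts
print(max_match("donaudampfschiff", de_parts, max_len=6))
# ['donau', 'dampf', 'schiff']
```

The same lookup splits the German compound only because its parts happen to be in the list, which hints at why learned subword vocabularies are attractive.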

Cultural and Linguistic Considerations

Grammar, scripts, and writing systems influence tokenization. For instance, agglutinative languages like Turkish (where words grow with suffixes) benefit from subword methods to manage complexity.

Tokenization in Machine Learning and AI

Role in Models

Vocabulary Creation

Tokens form a vocabulary—a mapping of tokens to indices. Vocabulary size affects performance:

- Large Vocabulary: Captures more detail but increases computation.

- Small Vocabulary: Efficient but risks missing rare words (out-of-vocabulary issues).
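
A small sketch of that trade-off: the vocabulary is capped at `max_size` entries, and anything that does not make the cut maps to an unknown token. The `<unk>` name and the function names are assumptions for illustration.

```python
from collections import Counter

def build_vocab(tokens, max_size, unk="<unk>"):
    counts = Counter(tokens)
    # Most frequent first; ties broken alphabetically so the result is deterministic.
    ranked = sorted(counts, key=lambda tok: (-counts[tok], tok))
    vocab = {unk: 0}  # index 0 is reserved for out-of-vocabulary tokens
    for tok in ranked[:max_size - 1]:
        vocab[tok] = len(vocab)
    return vocab

def encode(tokens, vocab, unk="<unk>"):
    return [vocab.get(tok, vocab[unk]) for tok in tokens]

corpus = "the cat sat on the mat the cat".split()
vocab = build_vocab(corpus, max_size=3)
print(vocab)                                 # {'<unk>': 0, 'the': 1, 'cat': 2}
print(encode("the dog sat".split(), vocab))  # [1, 0, 0]
```

With only three slots, both "dog" (never seen) and "sat" (seen but rare) collapse to index 0, which is the out-of-vocabulary problem in miniature.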

Example Models

- GPT: Uses BPE for subword tokenization.

- BERT: Relies on WordPiece to manage vocabulary and rare words.

- T5: Employs SentencePiece-based subword tokenization for flexibility.
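
BPE builds its vocabulary by repeatedly merging the most frequent adjacent pair of symbols. Here is a toy sketch of that training loop with made-up word frequencies; real implementations add details not shown here, such as byte-level handling, pre-tokenization, and explicit tie-breaking.

```python
from collections import Counter

def merge_pair(symbols, pair):
    """Replace every adjacent occurrence of `pair` in `symbols` with one merged symbol."""
    merged, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return tuple(merged)

def learn_bpe(word_freqs, num_merges):
    # Start with every word split into single characters.
    corpus = {tuple(word): freq for word, freq in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        pair_counts = Counter()
        for symbols, freq in corpus.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:
            break  # every word is already a single symbol
        best = max(pair_counts, key=pair_counts.get)
        merges.append(best)
        corpus = {merge_pair(symbols, best): freq for symbols, freq in corpus.items()}
    return merges

word_freqs = {"hug": 10, "pug": 5, "pun": 12, "bun": 4, "hugs": 5}  # toy counts
print(learn_bpe(word_freqs, num_merges=3))
# [('u', 'g'), ('u', 'n'), ('h', 'ug')]
```

Each learned merge becomes a vocabulary entry, so frequent fragments such as "ug" and "hug" end up as single tokens while rare words stay split into smaller pieces.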

Benefits of Tokenization

- Improved Analysis: Tokens enable pattern recognition, entity extraction, and sentiment scoring.

- Efficiency: Smaller units streamline processing of large text datasets.

- Customization: Rules can be adjusted for domains like medicine or law.

Limitations and Challenges

- Loss of Context: Splitting “kick the bucket” into [“kick”, “the”, “bucket”] may obscure its idiomatic meaning.

- Overhead: Subword tokenization on massive datasets can be computationally expensive.

- Ambiguity and Errors: Slang (“lit”), idioms, or jargon (“blockchain”) may be mishandled.

History of Tokenization

Early Beginnings

Tokenization emerged in the 1950s and 1960s with early computational linguistics. Rule-based systems used simple delimiters (spaces, punctuation) to parse text.

Evolution in NLP

In the 1980s and 1990s, statistical NLP brought regular expressions and probabilistic models into wider use, making tokenization more sophisticated.

Modern Era

The 2010s saw a leap with machine learning and deep learning. Subword tokenization (e.g., BPE, WordPiece) became prominent in models like Google’s BERT and Facebook’s neural machine translation systems.

Key Milestones

- 2001: NLTK’s release democratized NLP tools.

- 2017: The Transformer architecture was introduced, and the models that followed made subword tokenization the norm.

Implementing Tokenization

Step-by-Step Guide

- Choose a Tool: Pick based on needs—NLTK (simple), spaCy (robust), or Hugging Face (transformers).

- Preprocess Data: Remove noise (e.g., HTML tags), normalize text, and handle special characters.

- Apply Tokenization: Split text using the tool.

- Evaluate and Adjust: Check for errors (e.g., “U.S.” split wrongly) and refine.
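
Steps two to four can be sketched with the standard library alone. The patterns and function names below are assumptions for illustration; a real project would more likely lean on the tool chosen in step one.

```python
import html
import re

TAG = re.compile(r"<[^>]+>")
# Dotted abbreviations such as "U.S." are tried first so they stay in one piece.
TOKEN = re.compile(r"(?:[A-Za-z]\.){2,}|\w+|[^\w\s]")

def clean(raw):
    text = TAG.sub(" ", raw)    # drop HTML tags
    text = html.unescape(text)  # turn entities such as &amp; back into characters
    return re.sub(r"\s+", " ", text).strip()

def tokenize(raw):
    return TOKEN.findall(clean(raw))

tokens = tokenize("<p>The U.S. economy  grew &amp; stabilized.</p>")
print(tokens)
# ['The', 'U.S.', 'economy', 'grew', '&', 'stabilized', '.']

# Evaluate: a cheap regression check for a known trouble spot.
assert "U.S." in tokens
```

One limitation worth knowing: when "U.S." ends a sentence, this pattern swallows the final period into the abbreviation, so the check-and-refine loop still matters.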

Example Use Cases

- Chatbots: Tokenize “Schedule a meeting” → [“Schedule”, “a”, “meeting”] for intent detection.

- Sentiment Analysis: Tokenize reviews for word-level scoring.

- Search Engines: Index documents with tokenized terms.
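
For the sentiment case, word-level scoring can be as small as a dictionary lookup over tokens. The lexicon below is a hypothetical toy; real systems use curated or learned scores.

```python
import re

# Hypothetical toy lexicon mapping words to sentiment scores.
LEXICON = {"great": 1.0, "love": 1.0, "slow": -0.5, "terrible": -1.0}

def sentiment_score(review):
    tokens = re.findall(r"\w+", review.lower())
    scores = [LEXICON[t] for t in tokens if t in LEXICON]
    # Average over matched words; 0.0 means neutral or no known words.
    return sum(scores) / len(scores) if scores else 0.0

print(sentiment_score("Great camera but slow charging"))  # 0.25
```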

Code Snippet

Here’s a simple example using NLTK:

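```python
import nltk
from nltk.tokenize import word_tokenize

# One-time download of the tokenizer data. Recent NLTK releases name the
# resource "punkt_tab"; older ones use "punkt".
nltk.download("punkt_tab")

text = "I enjoy coding in Python!"
tokens = word_tokenize(text)
print(tokens)  # Output: ['I', 'enjoy', 'coding', 'in', 'Python', '!']
```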

Future Trends in Tokenization

Advancements in NLP

New models may reduce reliance on traditional tokenization, favoring character-level processing or learned token boundaries.
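
One way to picture this direction is byte-level processing, a close cousin of the character-level approach: the text is fed as raw UTF-8 bytes, so the "vocabulary" is just the 256 possible byte values. This is a sketch of the encoding step only, not of any particular model.

```python
def byte_tokens(text):
    # Every string maps onto ids in the fixed range 0..255, so nothing is
    # ever out of vocabulary; the cost is longer sequences.
    return list(text.encode("utf-8"))

ids = byte_tokens("h\u00e9llo")  # "héllo"; the accented letter takes two bytes
print(ids)                         # [104, 195, 169, 108, 108, 111]
print(bytes(ids).decode("utf-8"))  # héllo
```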

Multilingual Models

Models like XLM-R handle multiple languages, demanding flexible tokenization methods.

Integration with AI

Tokenization will evolve for real-time applications like generative AI and chatbots, prioritizing speed and efficiency.

FAQs

Tokenization splits text into tokens, whereas lemmatization and stemming reduce words to a root form (e.g., “running” → “run”).

Tokenization is not the same for every language: space-delimited languages (English) differ from unspaced ones (Chinese).

Tokenization is needed for most NLP tasks, though some character-level models bypass word-level splitting.

Modern transformer models use subword methods like BPE for flexibility.

Common pitfalls include over-splitting abbreviations and losing the meaning of multi-word phrases.

Practical Examples and Code Snippets

Simple Example

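Using spaCy for word tokenization:

```python
import spacy

# Requires the small English model, installed once with:
#   python -m spacy download en_core_web_sm
nlp = spacy.load("en_core_web_sm")

text = "I love coding in Python!"
doc = nlp(text)
tokens = [token.text for token in doc]
print(tokens)  # Output: ['I', 'love', 'coding', 'in', 'Python', '!']
```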

Real-World Scenario

For a search engine, tokenize a query:

- Input: “Find flights to New York”

- Output: [“Find”, “flights”, “to”, “New”, “York”]

This enables matching against indexed documents.
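
A rough sketch of that matching step is an inverted index, which maps each token to the documents containing it. The documents and function names are made up for illustration, and lowercasing is an added assumption so that "New" and "new" match.

```python
import re
from collections import defaultdict

def tokenize(text):
    return re.findall(r"\w+", text.lower())

def build_index(docs):
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for token in tokenize(text):
            index[token].add(doc_id)  # token -> ids of documents containing it
    return index

def search(index, query):
    # Rank documents by how many query tokens they contain.
    hits = defaultdict(int)
    for token in tokenize(query):
        for doc_id in index.get(token, ()):
            hits[doc_id] += 1
    return sorted(hits, key=lambda d: (-hits[d], d))

docs = {  # hypothetical documents
    1: "Cheap flights to New York",
    2: "Hotels in New York City",
    3: "Find train tickets to Boston",
}
index = build_index(docs)
print(search(index, "Find flights to New York"))  # [1, 2, 3]
```

Document 1 ranks first because it shares four tokens with the query; the other two share two each and fall back to id order.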

Conclusion

Tokenization is a foundational process that bridges human language and machine understanding. From its early rule-based origins to its role in cutting-edge AI, it remains a vital tool, continually adapting to new linguistic and technological challenges. Whether you’re building a chatbot or analyzing sentiment, mastering tokenization is key to unlocking the power of text data.